The biggest revolution brought by Large Language Models (LLMs) is the introduction of fluid reasoning. Unlike the computers we're used to working with, which follow a rigid set of rules, LLMs are unpredictable but flexible enough to handle a massive variety of complex tasks.

When building products that leverage these models, how we combine fluid reasoning with rigid structure is both an important problem and an opportunity for innovation.

Fluid reasoning opens up a whole world of possibilities. Now we can build products that better match the way we think, which is a game changer for tasks like processing natural language input and working with meaning instead of words. But we still need rigid reasoning for trust, safety, and reliability.

So, how can we combine the two?

The most common approach today is to... not use rigid reasoning. The current generation of chatbots shows the power and flexibility of a direct interface with LLMs, but this approach leads to many issues, like lawyers using ChatGPT for sources or teachers using GPT-3 to catch plagiarism. ChatGPT won't cite its sources or tell you when it's wrong.

There are a lot of important explorations into making LLM responses more reliable; our GPT-4 with Calc project is a great example. But while increasing the reliability of LLMs is important, we need to be able to check their reasoning until we can trust them completely. And even then, we'll want the option to understand their reasoning: to learn, to debug, and to improve.

Code Atlas is a lightweight exploration of pushing an LLM to solve problems using a rigid, human-understandable workflow structure.

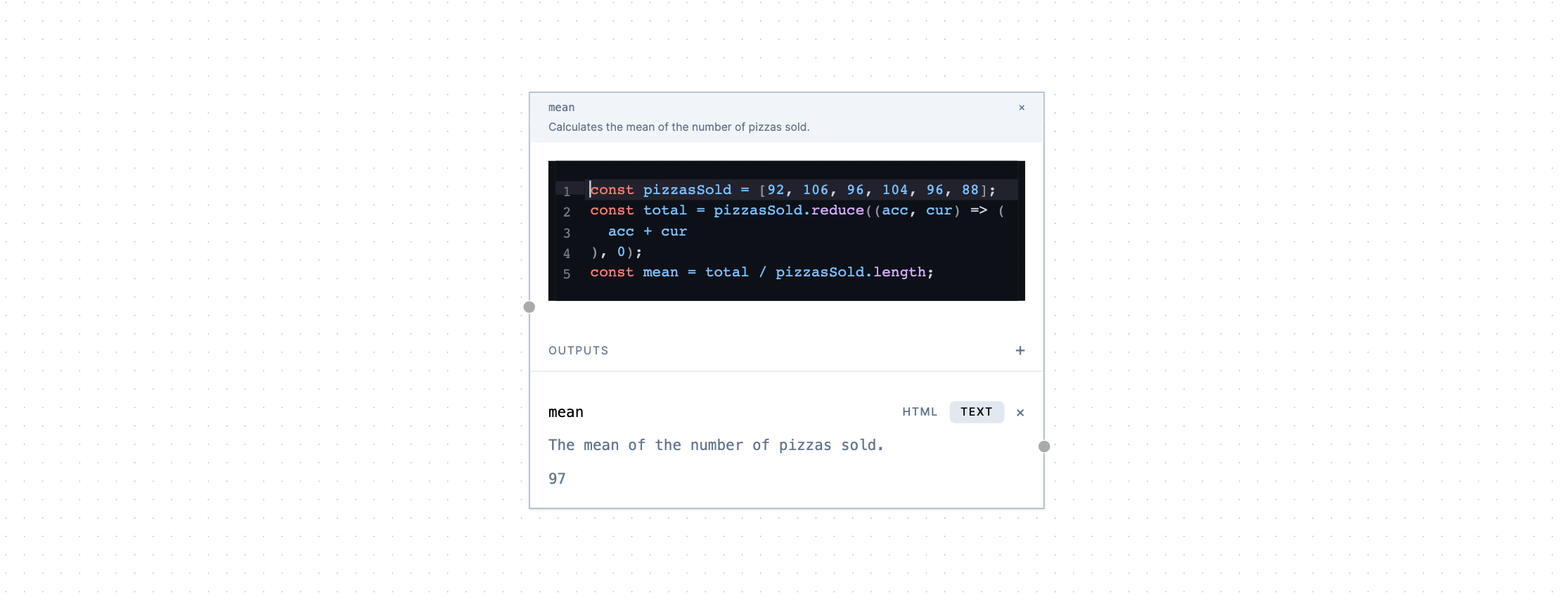

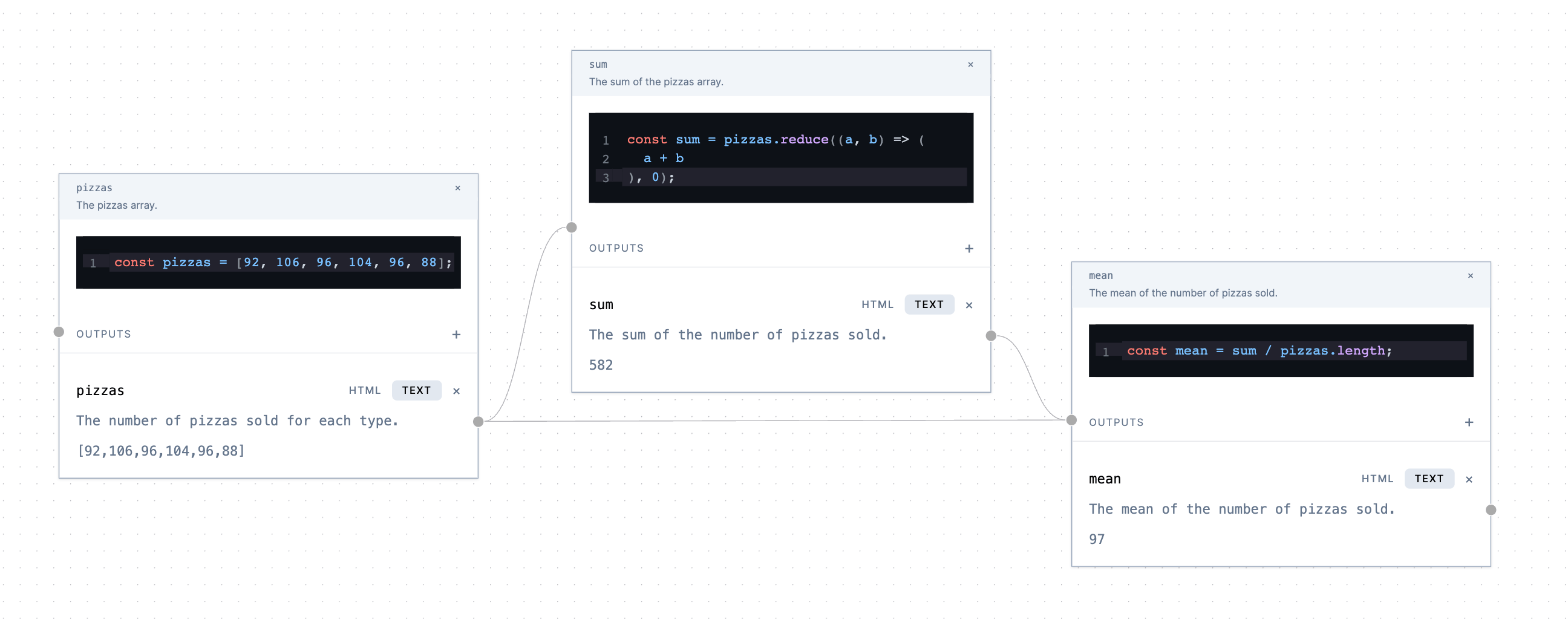

When asked a question, we prompt the model to build a “workflow” out of “code blocks”. Each code block contains a snippet of JavaScript code to execute, and can receive “inputs” from, and send “outputs” to, other blocks.
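To make the idea concrete, here is a minimal TypeScript sketch of a workflow as plain data plus a tiny executor. The `CodeBlock` shape (`id`, `inputs`, `code`) and the `runWorkflow` function are hypothetical, not Code Atlas's actual format; we assume each block's code is a JavaScript function body that reads its upstream outputs as named variables and `return`s its own output.

```typescript
// Hypothetical shape of a workflow "code block"; not Code Atlas's real schema.
interface CodeBlock {
  id: string;       // unique name; also the variable name downstream blocks use
  inputs: string[]; // ids of upstream blocks whose outputs this block reads
  code: string;     // JavaScript function body that must `return` the output
}

// Run every block once, in dependency (topological) order.
function runWorkflow(blocks: CodeBlock[]): Map<string, unknown> {
  const byId = new Map(blocks.map((b) => [b.id, b] as const));
  const outputs = new Map<string, unknown>();
  const visiting = new Set<string>();

  const run = (id: string): unknown => {
    if (outputs.has(id)) return outputs.get(id);
    const block = byId.get(id);
    if (!block) throw new Error(`Unknown block: ${id}`);
    if (visiting.has(id)) throw new Error(`Cycle detected at: ${id}`);
    visiting.add(id);
    // Evaluate upstream blocks first, then pass their outputs as arguments.
    const args = block.inputs.map(run);
    // new Function(param1, ..., body) compiles the snippet; inputs become parameters.
    const fn = new Function(...block.inputs, block.code) as (...a: unknown[]) => unknown;
    const result = fn(...args);
    visiting.delete(id);
    outputs.set(id, result);
    return result;
  };

  blocks.forEach((b) => run(b.id));
  return outputs;
}

// Usage: a three-block workflow computing the area of a 3 x 4 rectangle.
const results = runWorkflow([
  { id: "width", inputs: [], code: "return 3;" },
  { id: "height", inputs: [], code: "return 4;" },
  { id: "area", inputs: ["width", "height"], code: "return width * height;" },
]);
console.log(results.get("area")); // 12
```

Running blocks in dependency order is what lets a reader trace every intermediate value. Note that `new Function` evaluates arbitrary code, so a real tool would want to sandbox model-generated snippets.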

For example, if we ask the question from the Common Core Sheets:

The model might build a flow like this:

But we can only hold so much information in our heads at once, so we also encourage the model to split the logic into bite-sized pieces that we can “read” at a glance.

Because we're encoding the logic in an intermediate data structure, the user can play with it at almost no cost. We added an “input” node type that the model can use, which supports several kinds of input.
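One way to model such an input node, sketched here under our own assumptions, is a discriminated union: the `kind` tells the UI which control to render, and `parseInput` turns a raw form value into a typed value for downstream blocks. The three kinds and all names below are hypothetical; the post doesn't list which input types Code Atlas actually supports.

```typescript
// Hypothetical input node kinds; the real set of supported types may differ.
type InputNode =
  | { kind: "number"; id: string; label: string; value: number; min?: number; max?: number }
  | { kind: "text"; id: string; label: string; value: string }
  | { kind: "toggle"; id: string; label: string; value: boolean };

// Convert a raw string from a form control into a node with an updated value.
// Invalid numbers leave the node unchanged.
function parseInput(node: InputNode, raw: string): InputNode {
  switch (node.kind) {
    case "number": {
      const n = Number(raw);
      if (raw.trim() === "" || Number.isNaN(n)) return node;
      // Clamp to the optional bounds so downstream blocks see a sensible value.
      const lo = node.min ?? -Infinity;
      const hi = node.max ?? Infinity;
      return { ...node, value: Math.min(hi, Math.max(lo, n)) };
    }
    case "text":
      return { ...node, value: raw };
    case "toggle":
      return { ...node, value: raw === "true" };
  }
}

// Usage: a bounded number input.
const qty: InputNode = { kind: "number", id: "quantity", label: "Quantity", value: 8, min: 1, max: 16 };
console.log(parseInput(qty, "20").value); // 16 (clamped to max)
console.log(parseInput(qty, "abc").value); // 8 (invalid input ignored)
```

Because only the node's value changes, updating an input just means re-running the existing blocks, with no new call to the model.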

Given the same problem, the model can add an input node for each pizza type:

While the focus of this project was on understanding reasoning, we also get the benefit of more accurate responses. For example, LLMs often struggle with counting letters, which is trivial for a code snippet.
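For instance, a question like “how many r's are in ‘strawberry’?” becomes a deterministic loop inside a single block. This is a hypothetical snippet of the kind the model might write; `countLetter` is our own name.

```typescript
// Count case-insensitive occurrences of a single letter in a word.
function countLetter(word: string, letter: string): number {
  const target = letter.toLowerCase();
  let count = 0;
  for (const ch of word.toLowerCase()) {
    if (ch === target) count++;
  }
  return count;
}

console.log(countLetter("strawberry", "r")); // 3
console.log(countLetter("Mississippi", "s")); // 4
```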

Another benefit is the ability to quickly build a workflow to learn from. For example, if we're learning about interest rates, we can ask the model to build a workflow that calculates the interest rate given the principal, monthly payment, and number of payments.
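Under the hood, such a workflow rests on the standard amortized-loan formula, `M = P * r / (1 - (1 + r)^-n)`, where `P` is the principal, `M` the monthly payment, `n` the number of payments, and `r` the monthly interest rate. There's no closed-form solution for `r`, so a block has to search for it numerically. The sketch below uses bisection, which is our choice of method (the model could just as well pick another root-finder), and the function names are hypothetical.

```typescript
// Monthly payment for a fully amortizing loan: M = P * r / (1 - (1 + r)^-n).
function monthlyPayment(principal: number, monthlyRate: number, numPayments: number): number {
  if (monthlyRate === 0) return principal / numPayments;
  return (principal * monthlyRate) / (1 - Math.pow(1 + monthlyRate, -numPayments));
}

// Solve for the monthly rate by bisection. The payment increases with the rate,
// and payment(r) > P * r, so the answer lies between 0 and payment / principal.
function solveMonthlyRate(principal: number, payment: number, numPayments: number): number {
  if (payment * numPayments < principal) {
    throw new Error("Payments don't cover the principal; no non-negative rate exists.");
  }
  let lo = 0;
  let hi = payment / principal;
  for (let i = 0; i < 200; i++) {
    const mid = (lo + hi) / 2;
    if (monthlyPayment(principal, mid, numPayments) < payment) lo = mid;
    else hi = mid;
  }
  return (lo + hi) / 2;
}

// Usage: a $200,000 loan over 360 monthly payments at 0.5% per month.
console.log(monthlyPayment(200000, 0.005, 360).toFixed(2)); // 1199.10
const rate = solveMonthlyRate(200000, 1199.1, 360);
// Nominal annual rate = monthly rate x 12.
console.log((rate * 12 * 100).toFixed(2) + "% per year"); // 6.00% per year
```

Because the payment strictly increases with the rate, bisection is guaranteed to converge once the answer is bracketed.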

More generally, there is a lot of innovation to be found wherever AI has dramatically changed the equation. Being able to spin up a new worksheet in seconds is a game changer.

In addition to math problems, we can also use Code Atlas to learn about programming. For example, we can ask the model to build a workflow that draws a spiral of circles in SVG.
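Here is a sketch of the kind of code a “draw a spiral of circles” workflow might boil down to. It places circles along an Archimedean spiral (radius growing linearly with angle) and returns an SVG string; the function name, parameters, and defaults are all our own illustrative choices.

```typescript
// Build an SVG string with `count` circles along an Archimedean spiral.
// Parameter names and defaults are illustrative assumptions.
function spiralOfCircles(count: number, size = 400, turns = 3, circleRadius = 6): string {
  const center = size / 2;
  const maxSpiralRadius = center - circleRadius; // keep circles inside the canvas
  const circles: string[] = [];
  for (let i = 0; i < count; i++) {
    const t = count === 1 ? 0 : i / (count - 1); // progress along the spiral, 0..1
    const angle = t * turns * 2 * Math.PI;
    const r = t * maxSpiralRadius; // radius grows linearly with angle
    const cx = center + r * Math.cos(angle);
    const cy = center + r * Math.sin(angle);
    circles.push(`<circle cx="${cx.toFixed(1)}" cy="${cy.toFixed(1)}" r="${circleRadius}" fill="steelblue" />`);
  }
  return `<svg xmlns="http://www.w3.org/2000/svg" width="${size}" height="${size}">${circles.join("")}</svg>`;
}

console.log(spiralOfCircles(40));
```

Exposing `count`, `turns`, and `circleRadius` as input nodes would let a learner see how each parameter changes the drawing.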

Or even a very customizable snowman!
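A customizable snowman follows the same pattern, with several input nodes feeding one drawing block. In this hypothetical sketch, the `SnowmanOptions` fields stand in for whatever inputs the model chooses to expose.

```typescript
// Hypothetical options; in a workflow, each field could be its own input node.
interface SnowmanOptions {
  bodyRadius: number; // radius of the bottom snowball, in pixels
  shrink: number;     // each ball's radius as a fraction of the ball below it
  balls: number;      // number of stacked snowballs
  hat: boolean;       // whether to draw a simple top hat
}

function drawSnowman({ bodyRadius, shrink, balls, hat }: SnowmanOptions): string {
  // Radii from the bottom ball up: bodyRadius, bodyRadius * shrink, ...
  const radii = Array.from({ length: balls }, (_, i) => bodyRadius * Math.pow(shrink, i));
  const head = radii.length > 0 ? radii[radii.length - 1] : 0;
  const hatHeight = hat ? head : 0;
  const width = 2 * bodyRadius + 20;
  const height = radii.reduce((sum, r) => sum + 2 * r, 0) + hatHeight + 10;
  const cx = width / 2;

  const shapes: string[] = [];
  let bottom = height - 5; // SVG y grows downward, so start at the ground and stack upward
  for (const r of radii) {
    shapes.push(`<circle cx="${cx}" cy="${bottom - r}" r="${r}" fill="white" stroke="black" />`);
    bottom -= 2 * r; // the next ball sits on top of this one
  }
  if (hatHeight > 0) {
    // A hat as wide as the head's radius, resting on top of the head.
    shapes.push(`<rect x="${cx - head / 2}" y="${bottom - hatHeight}" width="${head}" height="${hatHeight}" fill="black" />`);
  }
  return `<svg xmlns="http://www.w3.org/2000/svg" width="${width}" height="${height}">${shapes.join("")}</svg>`;
}

// Usage: three balls, each 70% the size of the one below, with a hat.
console.log(drawSnowman({ bodyRadius: 60, shrink: 0.7, balls: 3, hat: true }));
```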

While Code Atlas was just a quick prototype, we're excited to keep exploring ways to combine fluid reasoning with rigid structure. We would love to hear your thoughts! Tweet us at @GitHubNext or send us an email at next@github.com.